I am currently working on a project developing an AI chatbot for “really smart people” – medical researchers. This audience is intellectually rich and time poor. Layered on top of this complex customer experience setting are the various regulations (and absence of regulations) around the use of artificial intelligence in science, health, government, and knowledge generation. One of the greatest issues with AI is trust. Luckily, I have so much experience with customer trust in both my professional and academic life! I feel very comfortable talking about trust, or the lack thereof, in AI.

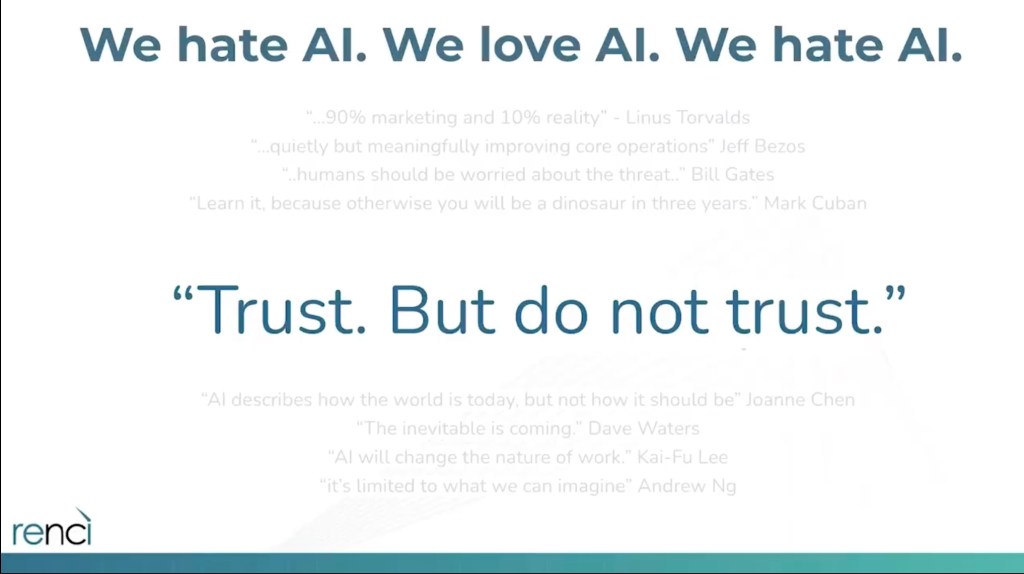

One of the most resonant parts of the presentation I recently gave is that the key phrase with AI is commonly: “Trust but verify”.

Simply put, this means that with AI: do not trust.

“How can we make AI more trustworthy?” is the question a lot of scientists are asking. The presentation that I recently gave at an APRA-H asks (and answers) a more nuanced question: considering the current environment, how can we enable the customer to know when they can trust us?